Converting from Denary to Binary and Hex

Character Sets

Computers can't work directly with the letters that we use, because they only store and process numbers. Any letter or symbol we want to use has to be converted into a number before the computer can process it. Tables that match each character to a number are called character sets.
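As a rough illustration of the idea, the short Python sketch below looks up the number behind each character and then turns the numbers back into text. The helper names `to_codes` and `from_codes` are made up for this example; `ord` and `chr` are Python's built-in functions for this lookup.

```python
def to_codes(text):
    """Return the character-set number for each character in text."""
    return [ord(ch) for ch in text]


def from_codes(codes):
    """Turn a list of character-set numbers back into text."""
    return "".join(chr(n) for n in codes)


codes = to_codes("Hi!")
print(codes)              # [72, 105, 33]
print(from_codes(codes))  # Hi!
```

Notice that even the exclamation mark has its own number: every symbol the computer can show needs an entry in the table.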

You need to know about two for the exam: ASCII and Unicode.

ASCII

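ASCII (American Standard Code for Information Interchange) is one of the earlier character sets. Standard ASCII uses 7 bits per character, which gives 128 possible characters. Each character is usually stored in an 8-bit byte, and extended versions of ASCII use all 8 bits. The number of possible combinations of 8 bits is 256, so extended ASCII has 256 characters to choose from. This is enough for English letters, digits, symbols and other useful things such as control codes.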
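The link between the number of bits and the number of characters can be checked with a quick calculation. This is only a sketch: the function name `combinations` is made up for the example. It also shows the letter A as a denary number, an 8-bit binary pattern and a two-digit hex value. For reference, standard ASCII defines 128 characters (7 bits), and 8-bit extended sets offer 256.

```python
def combinations(bits):
    """Number of different patterns that fit in the given number of bits."""
    return 2 ** bits


print(combinations(7))  # 128
print(combinations(8))  # 256

# The letter 'A' as denary, 8-bit binary and 2-digit hex
code = ord("A")
print(code, format(code, "08b"), format(code, "02X"))  # 65 01000001 41
```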

Unicode

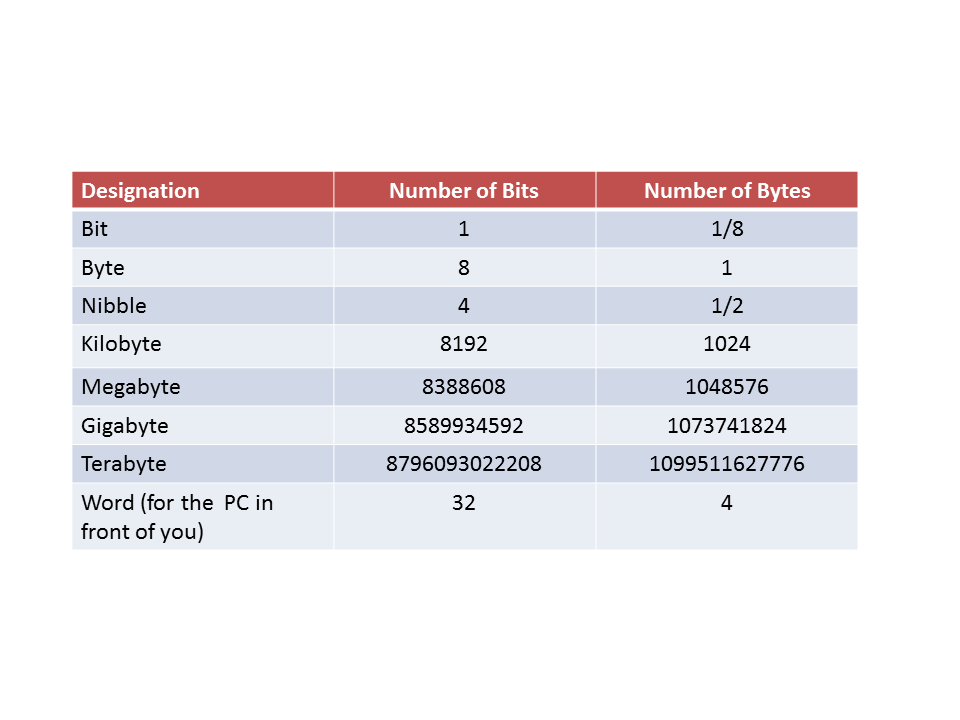

Unicode came after ASCII and it uses more bits per character. Because there are more bits, there is a much higher number of possible characters. Unicode has enough characters to store Chinese, Russian, Greek and many other writing systems.
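To see that characters outside English need bigger numbers, the sketch below prints the Unicode number for a few sample characters and how many bytes each one takes when saved. It assumes the text is stored as UTF-8, which is one common way of storing Unicode and is not covered in these notes. The function name `describe` is made up for the example.

```python
def describe(ch):
    """Return (Unicode number, hex form, bytes needed in UTF-8) for one character."""
    code = ord(ch)
    size = len(ch.encode("utf-8"))  # assumption: text is stored as UTF-8
    return code, hex(code), size


for ch in ["A", "Ж", "Ω", "中"]:
    print(ch, describe(ch))

# A (65, '0x41', 1)
# Ж (1046, '0x416', 2)
# Ω (937, '0x3a9', 2)
# 中 (20013, '0x4e2d', 3)
```

Only the English letter fits in the 0 to 255 range of a single byte; the Russian, Greek and Chinese characters all have numbers too large for 8 bits.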

Instructions in a CPU

Very early computers were hard-wired. This meant you could only feed data into them and they would perform a set calculation. If you wanted one to do something different, you would need to take it to bits and then put it back together in a different way.

A chap called John von Neumann changed this - he described a way to feed in instructions as well as data, with both held in the same memory. It basically involved having a set number of bits for the data and a set number of bits to define what to do with the data.

Operand - this is the word used for the data.

Operator - this is the word used for the instruction, i.e. what to do with the data. You will often see this part called the opcode (operation code).

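An example might be - an 8 bit operand that signifies the number 37 (00100101) followed by a 4 bit operator to signify that this number should be added to the number already in the accumulator. Note that 4 bits would not be enough for the operand here, because 4 bits can only hold the numbers 0 to 15.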
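The example above can be sketched in Python. Everything here is an assumption made for illustration: the field widths (8 bits for the operand, since 37 needs at least 6 bits, and 4 bits for the operator), the code `0001` meaning "add", and the function names. It is not the instruction format of any real CPU.

```python
OPERAND_BITS = 8   # assumed width of the data part
OPERATOR_BITS = 4  # assumed width of the instruction part
ADD = 0b0001       # hypothetical operator code meaning "add to accumulator"


def encode(operand, operator):
    """Pack the operand followed by the operator into one number."""
    if not 0 <= operand < 2 ** OPERAND_BITS:
        raise ValueError("operand does not fit in the operand bits")
    if not 0 <= operator < 2 ** OPERATOR_BITS:
        raise ValueError("operator does not fit in the operator bits")
    return (operand << OPERATOR_BITS) | operator


def decode(instruction):
    """Split an instruction back into (operand, operator)."""
    operator = instruction & (2 ** OPERATOR_BITS - 1)  # lowest 4 bits
    operand = instruction >> OPERATOR_BITS             # remaining bits
    return operand, operator


def run(instruction, accumulator):
    """Carry out one instruction and return the new accumulator value."""
    operand, operator = decode(instruction)
    if operator == ADD:
        return accumulator + operand
    raise ValueError("unknown operator")


instruction = encode(37, ADD)
print(format(instruction, "012b"))  # 001001010001
print(run(instruction, 5))          # 42
```

In the printed pattern, the first 8 bits (00100101) are the operand 37 and the last 4 bits (0001) are the operator. Trying `encode(37, ADD)` with `OPERAND_BITS = 4` raises an error, which shows why the number of bits set aside for the data limits the values an instruction can carry.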